In recent years, artificial intelligence (AI) has made incredible strides in various industries, from healthcare and finance to manufacturing and entertainment. Generative AI is one of its most groundbreaking subsets, but what is it? In this article, I will delve into generative AI, discussing its models, applications, and impact on our daily lives. I will also explore OpenAI’s text-to-image technology and the generative adversarial network (GAN) framework.

Introduction to Generative AI

Generative AI is a rapidly growing subset of artificial intelligence that focuses on creating new, previously unseen data samples based on an existing dataset. Generative AI models can generate novel content that closely resembles the original data by learning the underlying patterns and structures within the input data. This ability to synthesize new data opens up possibilities for innovation and creativity across various domains, including text, images, music, and complex structures like molecules.

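The primary goal of generative AI is to model the probability distribution of the input data. So what the heck does that mean? Put simply, the model learns how likely any given data point is: which combinations of pixels, words, or notes are typical of the training data and which are not. Once it has learned this distribution, a generative model can sample new data points from it, producing new content.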
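To make this concrete, here is the simplest possible generative model: a single normal distribution fitted to one-dimensional data. The sketch below is plain Python with made-up data, and `fit_gaussian` and `sample` are illustrative names rather than part of any library. It "learns" the distribution by estimating its mean and standard deviation, then samples new points from it. Deep generative models follow the same learn-then-sample recipe, but use neural networks to represent far richer distributions over images, text, or audio.

```python
import random
import statistics


def fit_gaussian(data):
    """'Train' the model: maximum-likelihood mean and standard deviation."""
    mu = statistics.fmean(data)
    sigma = statistics.pstdev(data, mu)  # population std dev is the MLE
    return mu, sigma


def sample(mu, sigma, n, seed=None):
    """'Generate': draw n new points from the learned distribution."""
    rng = random.Random(seed)
    return [rng.gauss(mu, sigma) for _ in range(n)]


# Made-up "training set" of heights in centimetres.
heights = [162.0, 170.5, 158.3, 175.2, 168.9, 181.4, 165.0, 172.3]
mu, sigma = fit_gaussian(heights)
new_heights = sample(mu, sigma, n=5, seed=42)
print(f"learned mean={mu:.1f} cm, std={sigma:.1f} cm")
print("new samples:", [round(h, 1) for h in new_heights])
```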

Advances in deep learning have driven much of the progress in generative AI. Generative models such as generative adversarial networks (GANs), variational autoencoders (VAEs), and recurrent neural networks (RNNs) have demonstrated remarkable success in generating high-quality content across multiple domains. As a result, generative AI has become an essential tool in diverse industries, from art and design to drug discovery and materials science.

In addition to its creative applications, generative AI also has the potential to address challenges associated with data scarcity and privacy. By generating synthetic data that mimics the properties of real data, generative AI can augment existing datasets, enabling better model training and performance. Moreover, synthetic data can be used in place of sensitive data, preserving privacy and complying with data protection regulations.

In the following sections, I will delve deeper into the various generative AI models, their applications, and the impact of generative AI on society. Furthermore, I will explore the text-to-image technology developed by OpenAI and the generative adversarial network (GAN) framework that has revolutionized the field of generative AI.

Generative AI Models

In this section, I will discuss some of the most popular generative AI models, including generative adversarial networks (GANs), variational autoencoders (VAEs), and recurrent neural networks (RNNs).

Generative Adversarial Networks (GANs)

Generative adversarial networks (GANs) are a class of generative models that have gained significant attention due to their ability to generate high-quality, realistic data samples. Introduced by Ian Goodfellow in 2014, GANs have become a cornerstone of generative AI. They consist of two neural networks, a generator and a discriminator, trained simultaneously in a zero-sum game.
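Goodfellow et al. formalized this game as a minimax problem. Writing $D(x)$ for the discriminator's estimated probability that $x$ is real, $G(z)$ for the generator's output given noise $z \sim p_z$, and $p_{\text{data}}$ for the real-data distribution, the two networks play:

```latex
\min_{G} \max_{D} \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator maximizes $V$ by assigning high probability to real samples and low probability to generated ones; the generator minimizes it by making $D(G(z))$ large. In practice, the generator is often trained to maximize $\log D(G(z))$ instead, because $\log(1 - D(G(z)))$ gives weak gradients early in training, when the discriminator rejects fakes easily.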

Generator

The generator is responsible for creating new data samples. It receives random noise as input and transforms it into a data sample that mimics the properties of the real data. The generator aims to create samples indistinguishable from the actual data, effectively “fooling” the discriminator into believing they are genuine.

Discriminator

The discriminator acts as a judge, evaluating the authenticity of the generator’s data samples. It receives both real data samples and the generator’s output and must distinguish between them. The discriminator aims to correctly identify whether a given sample is real or generated.

Basic GAN Architecture. Source: Arxiv Vanity

Training Process

The training process of GANs is similar to a game between the generator and the discriminator. The generator seeks to create realistic samples to fool the discriminator, while the discriminator strives to improve its ability to distinguish between real and fake samples. As the two networks compete against each other, they both improve over time, resulting in the generation of increasingly realistic data samples.

The training process can be summarized in the following steps:

- Sample a batch of real data and a batch of noise.

- Generate fake data by passing the noise through the generator.

- Train the discriminator on the actual data (labelled as real) and the fake data (labelled as fake).

- Sample a new batch of noise and generate another set of fake data.

- Train the generator to produce samples that the discriminator classifies as real.

This iterative process continues until the generator produces samples nearly indistinguishable from the real data, and the discriminator can no longer accurately differentiate between the two.
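The five steps above can be traced in a deliberately tiny, dependency-free sketch. Everything here is an illustrative assumption rather than a practical recipe: the generator is the linear map G(z) = a*z + b, the discriminator is a logistic regression D(x) = sigmoid(w*x + c), the "real" data comes from a normal distribution with mean 4, the gradients are derived by hand, and the generator uses the common non-saturating loss -log D(G(z)). Real GANs use deep networks and an automatic-differentiation framework.

```python
import math
import random


def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


class ToyGAN:
    """1-D toy GAN: G(z) = a*z + b, D(x) = sigmoid(w*x + c)."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.a, self.b = 1.0, 0.0  # generator parameters
        self.w, self.c = 0.1, 0.0  # discriminator parameters

    def generate(self, noise):
        return [self.a * z + self.b for z in noise]

    def discriminate(self, x):
        return sigmoid(self.w * x + self.c)

    def d_loss(self, real, fake):
        # Binary cross-entropy with real labelled 1 and fake labelled 0.
        total = -sum(math.log(self.discriminate(x)) for x in real)
        total -= sum(math.log(1.0 - self.discriminate(x)) for x in fake)
        return total / (len(real) + len(fake))

    def g_loss(self, noise):
        # Non-saturating generator loss: -log D(G(z)).
        return -sum(math.log(self.discriminate(x)) for x in self.generate(noise)) / len(noise)

    def d_step(self, real, fake, lr):
        # For BCE, the gradient w.r.t. the logit is D(x) - label.
        gw = gc = 0.0
        for x, label in [(x, 1.0) for x in real] + [(x, 0.0) for x in fake]:
            g = self.discriminate(x) - label
            gw += g * x
            gc += g
        n = len(real) + len(fake)
        self.w -= lr * gw / n
        self.c -= lr * gc / n

    def g_step(self, noise, lr):
        # Chain rule: dLoss/dx = (D(x) - 1) * w, with dx/da = z and dx/db = 1.
        ga = gb = 0.0
        for z in noise:
            g = (self.discriminate(self.a * z + self.b) - 1.0) * self.w
            ga += g * z
            gb += g
        self.a -= lr * ga / len(noise)
        self.b -= lr * gb / len(noise)

    def train(self, steps=500, batch=64, lr=0.05, real_mean=4.0, real_std=1.0):
        for _ in range(steps):
            real = [self.rng.gauss(real_mean, real_std) for _ in range(batch)]  # 1. real batch
            noise = [self.rng.gauss(0.0, 1.0) for _ in range(batch)]            # 1. noise batch
            fake = self.generate(noise)                                          # 2. fake data
            self.d_step(real, fake, lr)                                          # 3. train D
            noise = [self.rng.gauss(0.0, 1.0) for _ in range(batch)]            # 4. fresh noise
            self.g_step(noise, lr)                                               # 5. train G


gan = ToyGAN(seed=0)
gan.train()
print(f"generator after training: G(z) = {gan.a:.2f} * z + {gan.b:.2f}")
```

Because a linear discriminator can only tell the two distributions apart by their means, this toy generator mainly tends to shift its output toward the real mean, and alternating updates like these can oscillate around the target instead of settling. That instability is a small-scale version of why GAN training is known to be delicate.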

Variants and Applications

Since their inception, GANs have been extended and improved through various modifications and architectures, such as conditional GANs (cGANs), progressively growing GANs (ProGANs), and Wasserstein GANs (WGANs). These advances have made it possible to generate high-quality images, text, music, and even 3D models.

GANs have found applications in a wide range of industries, including:

- Art and design: AI-generated art, style transfer, and image synthesis.

- Medical imaging: Synthesizing medical images, data augmentation, and anomaly detection.

- Computer vision: Image-to-image translation, super-resolution, and inpainting.

- Natural language processing: Text generation, translation, and summarization.

As the field of generative AI continues to evolve, GANs will likely play an increasingly important role in shaping the future of creativity and innovation across various domains.

Variational Autoencoders (VAEs)

Variational autoencoders (VAEs) are another generative model class that has gained prominence in generative AI. Introduced by Kingma and Welling in 2013, VAEs are a type of autoencoder that incorporates probabilistic modelling to generate new data samples. They are particularly well-suited for unsupervised learning tasks, where the goal is to learn a compact and meaningful representation of the input data without relying on labelled examples.

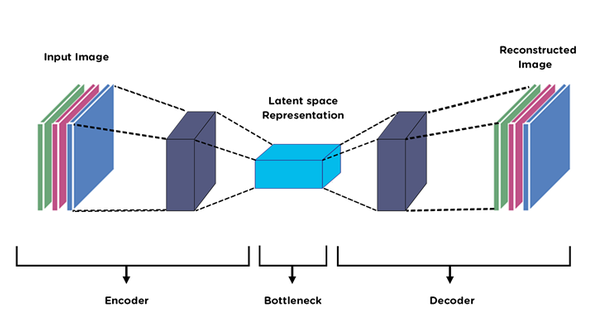

Autoencoder Structure

An autoencoder is a neural network that learns to compress input data into a lower-dimensional representation called the latent space and then reconstructs the input data from this representation. It consists of two components: an encoder and a decoder.

- Encoder: The encoder maps the input data into a lower-dimensional latent space. This process is often called encoding or compression, as it reduces the dimensionality of the input data while preserving its essential features.

- Decoder: The decoder takes the latent space representation and maps it back to the original data space, effectively reconstructing the input data. This process is referred to as decoding or decompression.
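A minimal sketch of the encoder/decoder idea, assuming the simplest possible setting: 2-D points lying close to the line y = 2x, a linear encoder that compresses each point to one number (a 1-D latent space), and a linear decoder that maps it back. All names are illustrative; a real autoencoder would use deep non-linear layers and a framework such as PyTorch or JAX rather than hand-written gradients.

```python
import random


def encode(e, x):
    """Encoder: compress a 2-D point to a single latent number."""
    return e[0] * x[0] + e[1] * x[1]


def decode(d, z):
    """Decoder: map the latent number back to a 2-D point."""
    return [d[0] * z, d[1] * z]


def reconstruction_error(data, e, d):
    """Mean squared distance between each point and its reconstruction."""
    total = 0.0
    for x in data:
        x_hat = decode(d, encode(e, x))
        total += (x_hat[0] - x[0]) ** 2 + (x_hat[1] - x[1]) ** 2
    return total / len(data)


def train_autoencoder(data, steps=500, lr=0.02, seed=0):
    """Fit encoder weights e and decoder weights d by full-batch gradient descent."""
    rng = random.Random(seed)
    e = [rng.uniform(-0.5, 0.5) for _ in range(2)]
    d = [rng.uniform(-0.5, 0.5) for _ in range(2)]
    for _ in range(steps):
        ge, gd = [0.0, 0.0], [0.0, 0.0]
        for x in data:
            z = encode(e, x)
            err = [r - t for r, t in zip(decode(d, z), x)]
            dz = err[0] * d[0] + err[1] * d[1]  # error pushed back into the latent code
            for i in range(2):
                gd[i] += 2 * err[i] * z
                ge[i] += 2 * dz * x[i]
        n = len(data)
        e = [e[i] - lr * ge[i] / n for i in range(2)]
        d = [d[i] - lr * gd[i] / n for i in range(2)]
    return e, d


# Toy data: 2-D points scattered closely around the line y = 2x.
rng = random.Random(1)
data = []
for _ in range(100):
    t = rng.gauss(0.0, 1.0)
    data.append((t + rng.gauss(0.0, 0.05), 2 * t + rng.gauss(0.0, 0.05)))

e, d = train_autoencoder(data)
print("reconstruction error:", round(reconstruction_error(data, e, d), 4))
```

Because these points really vary along only one direction, a single latent number is enough to reconstruct them almost exactly. A purely linear autoencoder like this one recovers the same subspace as principal component analysis; the non-linear layers of deep autoencoders are what let them model curved structure in real data.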

Autoencoder Architecture. Source: Medium

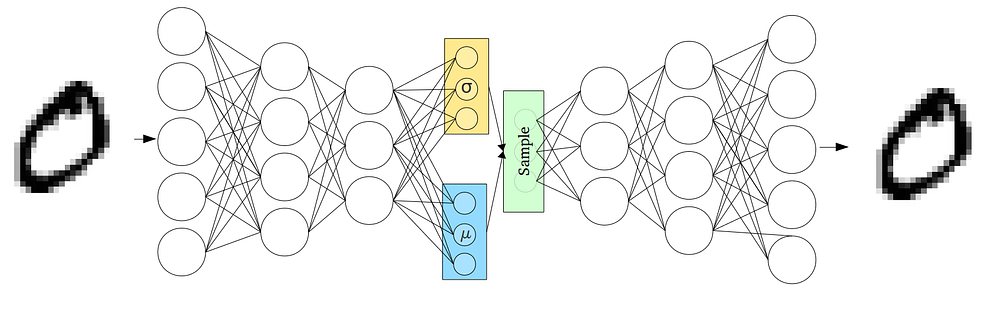

Probabilistic Modeling in VAEs

The critical difference between VAEs and other autoencoder models is how they handle the latent space representation. VAEs enforce a probabilistic constraint on the latent space, ensuring a smooth and continuous distribution of the latent variables. This is achieved by modelling the encoder’s output as a probability distribution over the latent space rather than a deterministic point estimate.

During training, VAEs optimize a variational lower bound on the data’s log-likelihood (often called the evidence lower bound, or ELBO), which consists of two terms: the reconstruction loss and the KL divergence. The reconstruction loss encourages the model to reconstruct the input data accurately, while the KL divergence between the encoder’s latent distribution and a prior (typically a standard normal distribution) acts as a regularization term that enforces the probabilistic constraint on the latent space.
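The two terms can be written down directly. The sketch below assumes a diagonal Gaussian encoder that outputs a mean `mu` and a log-variance `logvar` for each latent dimension, a standard normal prior, and a unit-variance Gaussian decoder; under those assumptions the KL term has a closed form and the reconstruction term reduces to half the squared error. It also shows the reparameterization trick that standard VAE training uses to sample latent codes in a differentiable way. The function names and numbers are illustrative, and a real implementation would compute these quantities on tensors in a framework such as PyTorch or JAX.

```python
import math
import random


def reparameterize(mu, logvar, rng):
    """Reparameterization trick: z = mu + sigma * eps with eps ~ N(0, 1),
    so randomness sits in eps and gradients can flow through mu and logvar."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def kl_to_standard_normal(mu, logvar):
    """Closed-form KL( N(mu, diag(exp(logvar))) || N(0, I) )."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar))


def vae_loss(x, x_hat, mu, logvar, beta=1.0):
    """Negative ELBO up to a constant, assuming a unit-variance Gaussian decoder.
    Setting beta > 1 gives the beta-VAE variant."""
    reconstruction = 0.5 * sum((a - b) ** 2 for a, b in zip(x, x_hat))
    return reconstruction + beta * kl_to_standard_normal(mu, logvar)


# Hypothetical encoder outputs and decoder reconstruction for a single input.
rng = random.Random(0)
mu, logvar = [0.3, -1.2], [-0.5, 0.1]
z = reparameterize(mu, logvar, rng)  # this z would be passed to the decoder
x, x_hat = [1.0, 0.0, 0.5], [0.9, 0.1, 0.4]
print("loss:", vae_loss(x, x_hat, mu, logvar))
```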

VAE Architecture. Source: Medium

Data Generation with VAEs

This probabilistic property of VAEs allows them to generate new samples by randomly sampling from the latent space and feeding the samples through the decoder. The smoothness of the latent space ensures that small perturbations in the latent variables result in coherent variations in the generated samples. This characteristic makes VAEs well-suited for various applications, such as generating images, text, and music, and in fields like drug discovery and materials design.
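Generation then needs only the decoder. In this sketch, `decode` is a hypothetical fixed function standing in for a trained decoder network (it is not learned from anything); the point is the procedure: draw latent codes from the standard normal prior and decode them, or walk along a straight line between two latent codes to produce a smooth interpolation.

```python
import math
import random


def decode(z):
    """Hypothetical stand-in for a trained VAE decoder: a fixed smooth map
    from a 2-D latent code to a 3-D 'data point' (not learned from data)."""
    return [math.tanh(z[0]), math.tanh(z[1]), math.tanh(z[0] - z[1])]


def sample_from_prior(n, latent_dim=2, seed=None):
    """Draw n latent codes from the N(0, I) prior and decode each one."""
    rng = random.Random(seed)
    return [decode([rng.gauss(0.0, 1.0) for _ in range(latent_dim)]) for _ in range(n)]


def interpolate(z_a, z_b, steps):
    """Decode evenly spaced points on the straight line from z_a to z_b (steps >= 2)."""
    path = []
    for k in range(steps):
        t = k / (steps - 1)
        z = [(1 - t) * a + t * b for a, b in zip(z_a, z_b)]
        path.append(decode(z))
    return path


samples = sample_from_prior(3, seed=0)
path = interpolate([-1.0, 0.5], [1.0, -0.5], steps=5)
for point in path:
    print([round(v, 3) for v in point])
```

With a real trained decoder, neighbouring points on such a path would decode to gradually changing outputs, which is the smoothness property described above.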

Variants and Applications

VAEs have been extended and adapted to various tasks and data types, leading to models such as conditional VAEs (cVAEs), β-VAEs, and adversarial autoencoders (AAEs). Some applications of VAEs include:

- Image generation: Creating diverse and realistic images, including faces, objects, and scenes.

- Style transfer: Modifying the style of an image while preserving its content.

- Text generation: Producing coherent and contextually relevant text.

- Drug discovery: Designing novel molecular structures with desired properties.

- Anomaly detection: Identifying unusual data samples or outliers in a dataset.

VAEs have also been combined with other generative models, such as GANs, to leverage the strengths of both architectures. For example, VAE-GANs combine the smooth latent space and reconstruction abilities of VAEs with the discriminative prowess of GANs, resulting in improved data generation capabilities.

Limitations

While VAEs have proven successful in many applications, they have some limitations. One notable limitation is the “blurriness” often observed in the generated samples. This issue arises due to the model’s focus on minimizing the reconstruction error, which can cause the VAE to generate overly smooth or averaged samples. In some cases, GANs produce sharper and more realistic samples than VAEs. However, VAEs often excel in tasks where the smoothness and continuity of the latent space are essential, such as interpolation and disentanglement.

Recurrent Neural Networks (RNNs)

Recurrent Neural Networks (RNNs) are a class of neural networks designed specifically for processing sequential data. An RNN is not a generative model by itself, but when trained to predict the next element of a sequence it becomes one, and RNNs are also used as components of larger generative models for sequences such as text, time series, and music. The architecture of RNNs allows them to capture the dependencies and patterns in sequences, making them well-suited for tasks like language modelling, text generation, and sequence-to-sequence learning.

RNN Architecture

The key feature of an RNN is its recurrent connections, which allow the network to maintain a hidden state that acts as a “memory” of the previous inputs in the sequence. This hidden state enables the RNN to capture temporal dependencies and adapt its output based on the context of the input sequence.

RNN Architecture. Source: V7 Labs

At each time step, the RNN receives an input, updates its hidden state, and produces an output. The hidden state is then passed to the next time step, allowing the RNN to accumulate information from the sequence as it processes the data.
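The update rule can be sketched with scalars instead of vectors and matrices to keep it short. The weight names (`w_xh`, `w_hh`, `w_hy`) and their values are illustrative assumptions; a real RNN uses weight matrices learned by backpropagation through time.

```python
import math


def rnn_forward(inputs, w_xh, w_hh, b_h, w_hy, b_y, h0=0.0):
    """Run a scalar vanilla RNN over a sequence.

    At each step:  h_t = tanh(w_xh * x_t + w_hh * h_{t-1} + b_h)
                   y_t = w_hy * h_t + b_y
    """
    h = h0
    hidden_states, outputs = [], []
    for x in inputs:
        h = math.tanh(w_xh * x + w_hh * h + b_h)  # new state mixes input and old state
        hidden_states.append(h)
        outputs.append(w_hy * h + b_y)
    return outputs, hidden_states


# A single "pulse" followed by zeros: the hidden state keeps a fading memory of it.
outputs, states = rnn_forward([1.0, 0.0, 0.0, 0.0], w_xh=1.0, w_hh=0.5, b_h=0.0, w_hy=2.0, b_y=0.0)
print([round(h, 3) for h in states])
```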

RNN Variants

Vanilla RNNs, while effective for short sequences, can suffer from vanishing or exploding gradient problems when processing long sequences. This issue hampers their ability to capture long-range dependencies in the data. To address these limitations, researchers have developed more advanced RNN architectures, such as Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs), designed to capture long-range dependencies better and maintain a stable gradient flow.
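A short worked bound shows where these gradient problems come from. Take a scalar RNN (notation chosen here for illustration) with $h_t = \tanh(a_t)$ and pre-activation $a_t = w_{hh}\,h_{t-1} + w_{xh}\,x_t + b_h$. Backpropagating from step $T$ to step $0$ multiplies one local derivative per step:

```latex
\frac{\partial h_T}{\partial h_0}
  = \prod_{t=1}^{T} \frac{\partial h_t}{\partial h_{t-1}}
  = \prod_{t=1}^{T} w_{hh}\,\tanh'(a_t),
\qquad 0 < \tanh'(a_t) \le 1
\;\Rightarrow\;
\left|\frac{\partial h_T}{\partial h_0}\right| \le |w_{hh}|^{T}.
```

With $|w_{hh}| = 0.5$ and $T = 50$, the gradient is at most $0.5^{50} \approx 8.9 \times 10^{-16}$, so it vanishes. Conversely, when the units stay in tanh's near-linear region ($\tanh' \approx 1$) and $w_{hh} = 1.5$, the factor is about $1.5^{50} \approx 6.4 \times 10^{8}$, so it explodes. For vector-valued RNNs, the largest singular value of the recurrent weight matrix plays the role of $|w_{hh}|$.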

- Long Short-Term Memory (LSTM): LSTMs introduce a sophisticated gating mechanism consisting of input, output, and forget gates. These gates control the flow of information in the network, allowing LSTMs to selectively remember and forget information based on the context. This architecture enables LSTMs to learn long-range dependencies more effectively than vanilla RNNs.

- Gated Recurrent Units (GRUs): GRUs are a simplified variant of LSTMs that use gating mechanisms but with fewer parameters. GRUs combine the input and forget gates into a single “update” gate, reducing the model complexity while still maintaining the ability to capture long-range dependencies.
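The difference between the two cells is easiest to see side by side. This scalar sketch uses one widely used formulation of each cell; the parameter names are illustrative, and real implementations (for example, the LSTM and GRU layers in deep learning frameworks) operate on vectors with weight matrices. Counting parameter sets per unit shows why GRUs are lighter: three gate/candidate computations instead of four.

```python
import math


def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


# Each gate or candidate has an input weight (w_), a recurrent weight (u_) and a bias (b_).
LSTM_PARAMS = [k + g for g in "ifoc" for k in ("w_", "u_", "b_")]  # 4 computations
GRU_PARAMS = [k + g for g in "zrh" for k in ("w_", "u_", "b_")]    # 3 computations


def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell; p maps the names in LSTM_PARAMS to floats."""
    def pre(g):
        return p["w_" + g] * x + p["u_" + g] * h_prev + p["b_" + g]
    i = sigmoid(pre("i"))          # input gate: how much new content to write
    f = sigmoid(pre("f"))          # forget gate: how much old cell state to keep
    o = sigmoid(pre("o"))          # output gate: how much of the cell to expose
    c_tilde = math.tanh(pre("c"))  # candidate content
    c = f * c_prev + i * c_tilde   # updated cell state (long-term memory)
    h = o * math.tanh(c)           # updated hidden state
    return h, c


def gru_step(x, h_prev, p):
    """One step of a scalar GRU cell; p maps the names in GRU_PARAMS to floats.
    Uses one common convention; some references swap the roles of z and 1 - z."""
    z = sigmoid(p["w_z"] * x + p["u_z"] * h_prev + p["b_z"])  # update gate
    r = sigmoid(p["w_r"] * x + p["u_r"] * h_prev + p["b_r"])  # reset gate
    h_tilde = math.tanh(p["w_h"] * x + p["u_h"] * (r * h_prev) + p["b_h"])
    return (1.0 - z) * h_prev + z * h_tilde  # one gate blends old and new state


# With the forget gate pinned open and the input gate shut, the cell state survives.
p = {name: 0.0 for name in LSTM_PARAMS}
p["b_f"], p["b_i"] = 20.0, -20.0
h, c = lstm_step(x=5.0, h_prev=0.0, c_prev=1.0, p=p)
print(round(c, 6), len(LSTM_PARAMS), len(GRU_PARAMS))
```

The usage lines illustrate the mechanism that lets LSTMs carry information over long spans: when the forget gate stays open and the input gate stays shut, the cell state is copied forward almost unchanged, so gradients along that path do not shrink the way they do in a vanilla RNN.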

Generative Applications of RNNs

RNNs, especially LSTMs and GRUs, have been widely used in generative tasks involving sequences. Some notable generative applications of RNNs include:

- Text Generation: RNNs can be used as language models to generate coherent and contextually relevant text, given an initial seed or prompt. They have been used for story generation, poetry composition, and dialogue generation.

- Music Composition: RNNs can learn the patterns and structures in musical sequences, allowing them to generate original music compositions in various styles and genres.

- Handwriting Synthesis: RNNs can generate realistic handwriting samples by learning the stroke patterns and dynamics of human handwriting.

- Video Prediction: RNNs can be utilized to predict future frames in a video sequence, generating plausible continuations of the input video.
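Generation with a trained sequence model is a loop: predict a distribution over the next token, sample from it, append the sample, and repeat. To keep the sketch self-contained, a character bigram counter stands in for the RNN here; this is an assumption for illustration only, since a real RNN conditions on the whole history through its hidden state rather than just the last character. The `temperature` parameter, common in text-generation tools, rescales the model's log-probabilities: values below 1 make the output more predictable, values above 1 make it more varied.

```python
import math
import random
from collections import Counter, defaultdict


def train_bigram(text):
    """Stand-in for a trained RNN language model: counts of which character
    follows which (an RNN would condition on the whole history instead)."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        counts[prev][nxt] += 1
    return counts


def next_char_distribution(model, history, temperature=1.0):
    """Return (chars, probs) for the next character, rescaled by temperature."""
    options = model[history[-1]]
    chars = list(options)
    logits = [math.log(options[ch]) / temperature for ch in chars]
    m = max(logits)  # subtract the max for numerical stability
    weights = [math.exp(l - m) for l in logits]
    total = sum(weights)
    return chars, [w / total for w in weights]


def generate(model, seed, length, temperature=1.0, rng=None):
    """Autoregressive sampling: predict, sample, append, repeat."""
    rng = rng or random.Random()
    out = seed
    for _ in range(length):
        if not model[out[-1]]:
            break  # no known continuation for this character
        chars, probs = next_char_distribution(model, out, temperature)
        out += rng.choices(chars, weights=probs)[0]
    return out


corpus = "the cat sat on the mat. the dog sat on the log. "
model = train_bigram(corpus)
print(generate(model, seed="th", length=40, temperature=0.8, rng=random.Random(0)))
```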

RNNs can also serve as building blocks in larger generative systems. For example, the Sequence-to-Sequence (Seq2Seq) model pairs an RNN-based encoder with an RNN-based decoder for tasks such as neural machine translation and text summarization. Hybrids such as Variational Recurrent Neural Networks (VRNNs) and Recurrent GANs (RGANs) go further, combining RNNs with VAE- and GAN-style training to leverage the strengths of both.

Limitations and Future Directions

While RNNs, particularly LSTMs and GRUs, have been highly successful in various generative tasks, they are not without limitations. Training RNNs can be computationally expensive due to their sequential nature, which can limit the parallelism during training. Additionally, RNNs may struggle to capture long-range dependencies in the data, even with advanced architectures like LSTMs and GRUs.

Recent advances in natural language processing, such as the Transformer architecture, have shown that self-attention mechanisms can address some of these limitations, offering a more efficient and scalable alternative to RNNs for specific tasks. However, RNNs remain a valuable tool for generative AI, and ongoing research continues to explore new variants and applications of RNNs in the generative domain.
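The core of self-attention fits in a few lines. This sketch implements scaled dot-product attention, softmax(QK^T / sqrt(d)) V, on plain Python lists, and for simplicity it uses the input vectors directly as queries, keys, and values; a real Transformer first applies learned linear projections and uses multiple attention heads. Each position's output is computed independently of the others, which is what lets Transformers process a sequence in parallel instead of step by step like an RNN.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d = len(keys[0])
    out = []
    for q in queries:  # each query is handled independently (parallelizable)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # how strongly this position attends to every position
        out.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return out


def self_attention(sequence):
    # Simplifying assumption: identity projections, so Q = K = V = the input sequence.
    return attention(sequence, sequence, sequence)


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three made-up 2-D token embeddings
for row in self_attention(tokens):
    print([round(v, 3) for v in row])
```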

OpenAI Text-to-Image Technology

OpenAI, an AI research organization, has developed text-to-image technology that leverages the power of generative AI. Using deep learning models, OpenAI’s technology can generate high-quality images based on a given text description. This has opened up new art, design, advertising, and entertainment possibilities.

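OpenAI’s text-to-image models, known as DALL·E, build on generative modelling ideas related to those discussed earlier. The original DALL·E used a discrete VAE to compress images into tokens and a Transformer to generate those tokens from text, while DALL·E 2 and later versions rely on diffusion models. These models are trained on large datasets of paired text and images, allowing them to learn the intricate relationships between textual descriptions and visual representations.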

Text-to-Image example. Source: Assemblyai

Generative AI Applications

The potential applications of generative AI are vast and varied. Here are some examples of how generative AI models have already been used across different industries:

- Art and Design: AI-generated art has become popular, with artists using generative models to create unique and visually stunning pieces. Tools like DeepArt and RunwayML allow users to generate art based on specific styles or input images.

- Advertising and Marketing: Generative AI can create eye-catching promotional materials and advertisements tailored to target audiences.

- Video Games: Procedurally generated content, such as landscapes, characters, and items, can be created using generative AI techniques, adding variety and reducing development costs.

- Music Composition: Generative AI models can create original music compositions by learning patterns and structures from existing pieces. Tools like Magenta and AIVA are examples of AI-powered music composition platforms.

- Text Generation: Generative AI models can produce coherent and contextually relevant text based on a given prompt, which can be used for tasks like content creation, summarization, and translation. OpenAI’s GPT is a prime example of such a model.

- Drug Discovery: Generative models can be utilized to design novel molecules with desired properties, significantly speeding up the drug discovery process.

- Fashion: AI-generated designs can help fashion designers explore new ideas and styles, and generative models can also be used to predict and analyze fashion trends.

- Architecture and Urban Planning: Generative AI can be employed to design and optimize architectural structures, city layouts, and transportation systems.

The Impact of Generative AI on Society

Generative AI has the potential to transform many aspects of society, from how we create and consume content to how we approach scientific research and problem-solving. As the technology matures, its impact is becoming increasingly evident across industries and domains.

Creativity and Art

Generative AI has opened up new possibilities in art and creativity, enabling artists and designers to collaborate with AI to create unique and innovative works. From AI-generated paintings and sculptures to music compositions and poetry, generative models have demonstrated their capacity to push the boundaries of human creativity. By automating certain aspects of the creative process, generative AI can help artists explore new styles, techniques, and ideas, leading to the emergence of novel art forms and creative expressions.

Personalization and Customization

In the age of digital media, personalization and customization have become increasingly important to enhance user experiences. Generative AI models can create personalized content tailored to individual preferences, such as custom music playlists, personalized news feeds, and targeted advertisements. This customization improves user satisfaction and allows businesses to deliver more relevant and engaging content, fostering more robust customer relationships and loyalty.

Healthcare and Medical Research

Generative AI has shown promising potential in various healthcare and medical research applications. For example, GANs and VAEs can synthesize medical images for training diagnostic models or augment existing datasets. This can help improve the accuracy and robustness of AI-based diagnostic tools. Additionally, generative models can be used to design novel drug compounds and optimize existing ones, accelerating the drug discovery process and potentially leading to more effective treatments for various diseases.

Education and Training

Generative AI can also play a role in enhancing education and training experiences. For instance, AI-generated content, such as personalized learning materials, can be tailored to individual learners’ needs and preferences, facilitating more effective and engaging learning experiences. Moreover, generative models can create realistic virtual environments and simulations for training, enabling students and professionals to practice and develop their skills in a safe and controlled setting.

Ethical and Societal Considerations

While the potential benefits of generative AI are immense, it is essential to consider the technology’s ethical and societal implications. One significant concern is the misuse of generative AI to create deepfakes: manipulated videos, images, or audio clips that can be difficult to distinguish from authentic content. Deepfakes can spread misinformation, harass individuals, or manipulate public opinion, raising important questions about privacy, security, and trust in the digital age.

Moreover, as generative AI automates more creative tasks, it may affect the job market and the role of human creativity. It is crucial to strike a balance between leveraging the benefits of generative AI and preserving the value of human expertise and creativity.

The End, or Just the Beginning

Generative AI has emerged as a powerful and transformative technology, reshaping various aspects of our lives and offering unprecedented opportunities for innovation and creativity. With advancements in generative models like GANs, VAEs, and RNNs, we are witnessing the dawn of a new era in which AI-generated content, personalized experiences, and groundbreaking research applications are becoming increasingly accessible and widespread.

As we continue to push the boundaries of generative AI, it is crucial to remain mindful of the ethical and societal implications that accompany this technology. By striking a balance between harnessing the potential of generative AI and addressing its challenges, we can foster a future in which AI not only complements human creativity and expertise but also enhances our capacity for innovation and problem-solving.

The ever-evolving landscape of generative AI is a testament to the ingenuity and perseverance of researchers, engineers, and artists working together to explore new frontiers in artificial intelligence. As we look forward to the future of generative AI, let us embrace the opportunities it presents and work collectively to ensure that its benefits are realized responsibly, ethically, and sustainably for the betterment of society.

Frequently Asked Questions (FAQ)

What is generative AI?

Generative AI is a class of artificial intelligence models that can create new, original content or data based on the patterns and structures learned from a training dataset. These models can generate images, text, music, and other forms of data, leading to a wide range of creative and innovative applications.

What are some popular generative AI models?

Some popular generative AI models include Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and Recurrent Neural Networks (RNNs), particularly Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs).

What are the main applications of generative AI?

Generative AI has many applications, including art and creativity, personalization and customization, healthcare and medical research, education and training, and more. It can be used to generate images, text, music, and other forms of data, enabling a wide variety of innovative use cases.

How do Generative Adversarial Networks (GANs) work?

GANs consist of two neural networks, a generator and a discriminator, that compete against each other. The generator creates fake samples while the discriminator distinguishes between real and fake samples. Through this adversarial process, the generator learns to generate increasingly realistic samples, and the discriminator becomes better at identifying fakes.

How can generative AI be used in healthcare?

Generative AI has multiple applications in healthcare, such as synthesizing medical images for training diagnostic models, designing novel drug compounds, and optimizing existing ones. This can lead to more accurate diagnostics and effective treatments for various diseases.

Can generative AI help in education?

Generative AI can enhance education and training experiences by creating personalized learning materials tailored to individual learners’ needs and preferences. It can also generate realistic virtual environments and simulations for training purposes, enabling students and professionals to practice and develop their skills in a safe and controlled setting.

What is the role of Recurrent Neural Networks (RNNs) in generative AI?

RNNs are a class of neural networks designed for processing sequential data. They can be used as components of generative models for tasks involving sequences, such as text, time series, and music, effectively capturing dependencies and patterns in the data.

Can generative AI models be combined for improved performance?

Yes, generative AI models can be combined to leverage the strengths of different architectures. Examples include VAE-GANs, which combine the smooth latent space and reconstruction abilities of VAEs with the discriminative prowess of GANs, and Sequence-to-Sequence (Seq2Seq) models that combine RNN-based encoders and decoders for tasks like neural machine translation and text summarization.

Do you agree or disagree with anything said in this article? Be sure to share in the comment section below.